29.11.23

Is there true technological progress if it isn’t ethical?

Last summer, the film Oppenheimer confronted audiences with this question by portraying the ethical dilemma of a great nuclear physicist asked to put his knowledge and expertise to work developing nuclear weapons. Today, the disruptive potential of AI is being compared to the impact that nuclear energy and its dangers had on society eighty years ago.

This post discusses the role of ethics in guiding technological advancement and introduces a practical tool for assessing and preventing the ethical risks of AI systems. In many application fields, the unregulated use of AI systems could result in discrimination, violations of fundamental rights, and societal harm. However, the unethical consequences of AI systems are typically not deliberate; they emerge from inadequate controls, lack of oversight, poor explainability, and incorrect deployment. So, without ethical awareness there may be technological advancement, but no true progress.

Physical and moral harm can be avoided through adequate risk prevention measures, such as ensuring data quality, system robustness, and secure, inclusive use in all applications. The need to prevent ethical risks is also driving ongoing developments in AI regulation, including President Biden’s Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence and the current negotiations on the AI Act in Europe. In anticipation of upcoming regulations, companies need to assess the ethical impact of their AI applications and take adequate risk mitigation measures.

We at SKAD assist businesses with this complex task. Our AI Governance team offers an Ethical Impact Assessment (EIA) based on the latest research in the field of AI ethics and trustworthiness.

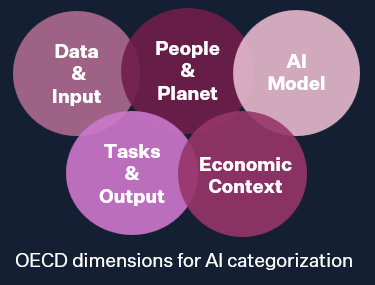

Our assessment incorporates guidelines from leading regulatory and international bodies, such as the EU High-Level Expert Group on AI, the Global Partnership on Artificial Intelligence, the OECD, and UNESCO. Through the EIA, we provide our clients with a systematic method to recognize the ethically relevant features of an AI system and to evaluate its impact in real-world application scenarios throughout its life cycle.

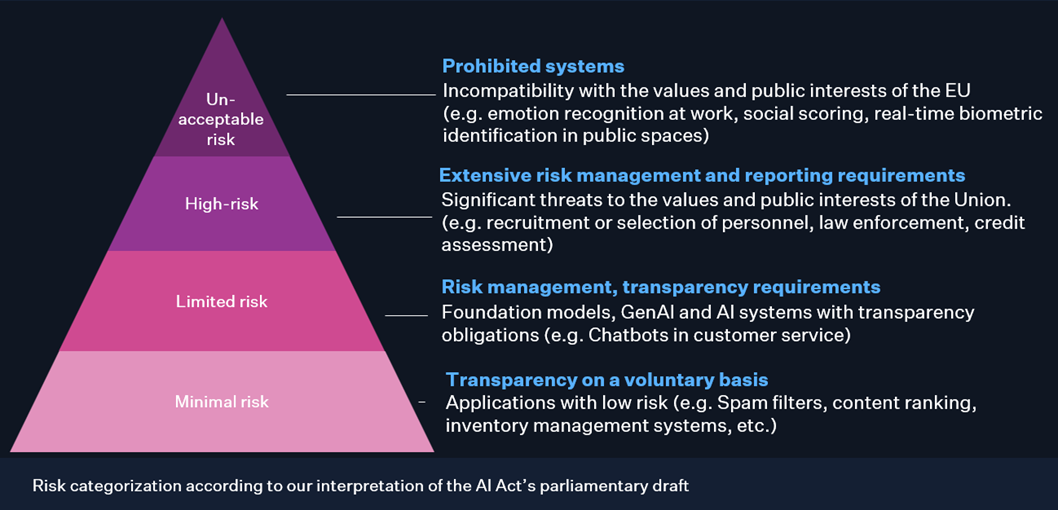

The first step of the EIA is the categorization of the AI system based on its technical and socioeconomic features. This step is necessary to identify the distinct risks that arise across application scenarios and to place the system in a risk matrix. In the European regulatory framework, this includes classifying the system into one of four risk categories: “unacceptable risk”, “high risk”, “limited risk”, and “minimal risk”.

Please note that there is no such thing as “no risk”. Every AI application carries potential risks that might be underestimated during development, which is why this first step is important even for systems that appear unproblematic.
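
To make the categorization step more concrete, here is a minimal Python sketch of the four European risk tiers as an enumeration, paired with a deliberately simplified triage function. The `SystemProfile` flags and the `categorize` function are hypothetical illustrations, not the AI Act’s legal criteria: an actual classification depends on the system’s intended purpose and on the detailed provisions and annexes of the regulation. The enum has no “no risk” member, so every system lands at least in the minimal-risk tier.

```python
from dataclasses import dataclass
from enum import Enum


class RiskCategory(Enum):
    """The four risk tiers of the European framework. There is deliberately
    no NO_RISK member: every system lands at least in MINIMAL."""
    UNACCEPTABLE = "unacceptable risk"
    HIGH = "high risk"
    LIMITED = "limited risk"
    MINIMAL = "minimal risk"


@dataclass
class SystemProfile:
    """Hypothetical, heavily simplified screening flags (not legal criteria)."""
    prohibited_practice: bool = False    # e.g. social scoring
    high_risk_use_case: bool = False     # e.g. recruitment or credit scoring
    interacts_with_people: bool = False  # e.g. a chatbot (transparency duties)


def categorize(profile: SystemProfile) -> RiskCategory:
    """Return the most severe tier whose (hypothetical) trigger applies."""
    if profile.prohibited_practice:
        return RiskCategory.UNACCEPTABLE
    if profile.high_risk_use_case:
        return RiskCategory.HIGH
    if profile.interacts_with_people:
        return RiskCategory.LIMITED
    return RiskCategory.MINIMAL


# A hypothetical CV-screening chatbot: the high-risk trigger is checked first
profile = SystemProfile(high_risk_use_case=True, interacts_with_people=True)
print(categorize(profile).value)  # high risk
```

Checking the tiers from most to least severe reflects the idea that a system falls under the strictest category that applies to it. In practice, obligations associated with lower tiers, such as transparency duties, can still apply on top of that.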

The second step of the EIA is a stakeholder analysis aimed at identifying individuals and groups whose interests or rights may be directly or indirectly affected by the AI application, or who possess characteristics that put them in a position of advantage or vulnerability. This analysis is necessary to understand different perspectives and to address the varied interests involved in the development and use of the AI system. This step also includes strategies for improving stakeholder engagement.

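Based on the system categorization and the stakeholder analysis, the third step is a risk assessment across several ethically relevant audit areas. For our purposes, we selected audit areas that correspond to the seven requirements of the EU High-Level Expert Group (HLEG) Assessment List for Trustworthy AI (ALTAI): human agency and oversight; technical robustness and safety; privacy and data governance; transparency; diversity, non-discrimination and fairness; societal and environmental well-being; and accountability. These requirements are largely mirrored by the general principles applicable to all AI systems in the European Parliament’s current version of the AI Act (Art. 4a).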
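
As a rough illustration of how findings from this step could be placed in a risk matrix, the Python sketch below rates each audit area by multiplying likelihood and severity. The audit areas are the seven HLEG ALTAI requirements; the three-point `Level` scale, the score cut-offs, and the example findings are hypothetical assumptions chosen for illustration only, and an actual EIA would ground each rating in documented evidence rather than in a single number.

```python
from enum import IntEnum

# Audit areas: the seven requirements of the HLEG Assessment List for Trustworthy AI
AUDIT_AREAS = (
    "Human agency and oversight",
    "Technical robustness and safety",
    "Privacy and data governance",
    "Transparency",
    "Diversity, non-discrimination and fairness",
    "Societal and environmental well-being",
    "Accountability",
)


class Level(IntEnum):
    """Hypothetical three-point scale used for both likelihood and severity."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def rating(likelihood: Level, severity: Level) -> str:
    """Map a cell of the 3x3 risk matrix to a rating (hypothetical cut-offs)."""
    score = likelihood * severity  # IntEnum arithmetic yields an int from 1 to 9
    if score >= 6:
        return "high"
    if score >= 3:
        return "medium"
    return "low"


def assess(findings: dict[str, tuple[Level, Level]]) -> dict[str, str]:
    """Rate each assessed audit area; reject areas outside the agreed list."""
    unknown = set(findings) - set(AUDIT_AREAS)
    if unknown:
        raise ValueError(f"Unknown audit areas: {sorted(unknown)}")
    return {area: rating(*findings[area]) for area in AUDIT_AREAS if area in findings}


# Illustrative findings for a hypothetical recruitment-screening model
findings = {
    "Diversity, non-discrimination and fairness": (Level.HIGH, Level.HIGH),
    "Transparency": (Level.MEDIUM, Level.MEDIUM),
    "Privacy and data governance": (Level.LOW, Level.HIGH),
}
print(assess(findings))  # privacy -> medium, transparency -> medium, fairness -> high
```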

Once potential risks have been identified, the fourth step is a trade-off analysis, in which we weigh the pros and cons of different corrective measures. Here we evaluate possible conflicts between risk management measures and examine how a solution intended to mitigate a risk in one audit area might unintentionally increase risk in another. Well-known examples are the fairness-accuracy and explainability-accuracy trade-offs, where making a model fairer or easier to explain can reduce its predictive accuracy.
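
The fairness-accuracy trade-off can be illustrated with a small, self-contained Python sketch. The data are hypothetical toy values chosen only to make the effect visible, and demographic parity difference (the gap between groups’ rates of positive predictions) is just one of several fairness metrics, used here for simplicity. Switching from one shared decision threshold to group-specific thresholds closes the gap in selection rates but introduces errors that the shared threshold avoided.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Example:
    score: float  # model score in [0, 1]
    label: int    # true outcome (1 = positive)
    group: str    # protected attribute value, e.g. "A" or "B"


# Hypothetical toy data, chosen only to make the trade-off visible
DATA = [
    Example(0.9, 1, "A"), Example(0.8, 1, "A"), Example(0.7, 1, "A"), Example(0.3, 0, "A"),
    Example(0.8, 1, "B"), Example(0.4, 0, "B"), Example(0.3, 0, "B"), Example(0.2, 0, "B"),
]


def predict(data, thresholds):
    """Return 0/1 predictions using a per-group decision threshold."""
    return [int(ex.score >= thresholds[ex.group]) for ex in data]


def accuracy(data, preds):
    """Share of predictions that match the true label."""
    return sum(p == ex.label for ex, p in zip(data, preds)) / len(data)


def demographic_parity_difference(data, preds):
    """Gap between the highest and lowest per-group selection rates."""
    rates = {}
    for g in {ex.group for ex in data}:
        group_preds = [p for ex, p in zip(data, preds) if ex.group == g]
        rates[g] = sum(group_preds) / len(group_preds)
    return max(rates.values()) - min(rates.values())


def evaluate(thresholds):
    preds = predict(DATA, thresholds)
    return accuracy(DATA, preds), demographic_parity_difference(DATA, preds)


# One shared threshold: every prediction is correct, but selection rates differ
print(evaluate({"A": 0.5, "B": 0.5}))    # (1.0, 0.5)
# Group-specific thresholds equalize selection rates at the cost of two errors
print(evaluate({"A": 0.75, "B": 0.35}))  # (0.75, 0.0)
```

Which fairness metric and which mitigation are appropriate depends on the application and on the stakeholders identified earlier in the EIA; the sketch only shows how improving one audit area can degrade another.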

After assessing risks and trade-offs on the basis of the system categorization and the stakeholder analysis, we identify the ethical and regulatory requirements applicable to the AI system under evaluation. We then establish risk management and compliance measures; these constitute the final output of the EIA.

While performing an EIA is essential to identify and prevent potentially harmful outcomes of high-risk systems, even systems expected to be classified as low-risk can benefit from this procedure. Underestimating the possible negative ethical impact on individuals and society can severely damage users’ trust and a company’s reputation. Integrating an EIA into a product’s strategic development can prevent ethical issues from emerging after release and help deployers better anticipate future ethical challenges.

Stay tuned for the next post, where we delve into the specifics of AI system classification and stakeholder analysis and explore how they play out in different AI application scenarios. Don't miss out on this valuable insight into the world of responsible AI!